Introduction

NPS is a widely used methodology to measure CX. In fact, it’s nearly ubiquitous and has gained a massive following around the world, primarily because of its simplicity and equally importantly, as a measure of predicting customer loyalty – and in turn, profitability.

We also know that Customer Churn is the single measurement that can sustain companies through good and bad times. However, determining and more importantly predicting Churn without a data-science team dedicated to this is nearly impossible. Most data-science teams look at _behaviors_ and try to correlate a multitude of behavioral variables to exit variables. While this is theoretically true, this almost never gives researchers the “Why” – it only gives a co-relational model – not a causational model.

In this whitepaper, we show an enhancement to the NPS model – to include an operationally & cognitively easy way to;

- Isolate & Identify Reasons for Churn (Root Cause) et al.

- Auto-Identification – Establish a model for dynamically and automatically updating the reasons.

- Predicting Churn – Based on the Reason-Identification comparisons of Passives Vs. Detractors.

Table of Contents

- Introduction

- NPS Programs – Use Existing Platform/Process/Models

- Isolation

- Identify

- Predict

- Uber: Live Examples of Churn Identification

- Stated Vs. Actual Intent – Saturated Markets

- Conclusion

NPS Programs – Use Existing Platform/Process/Models

Exit / Cancellation Surveys

Most NPS Programs already have models in place for contacting customers after they cancel/churn and determine reasons for churn. This is post-facto and while it gives good directional indicators, operationalizing this has been challenging. This is partly because reasons change over time and there is no “Base” comparison. By base comparison, we mean that if we are only surveying customers who are canceling/churning, then it’s very difficult to map that against folks who are NOT churning – existing customers.

Ongoing NPS / Check-In Surveys

Companies that use NPS as an ongoing basis for existing customers can use that same model to determine and predict churn. The benefit of using on-going operational surveys to determine Churn is that it is already in place – the ability to deliver emails/sms notifications, post transactions and collect data.

While it’s easy to determine NPS, dynamically determining root-cause / isolating and identifying root causes has been relegated to text analytics around “Why?”

For surveys, most text analytics models don’t work, partly because of NLP/Training data. Good Machine Learning models need a lot of training data to increase accuracy.

In this solution, we functionally determine the “Why” answer by using the collective intelligence of the customers themselves.

Isolation

The first task at hand is Isolation. By isolation, we mean determining the top one or two reasons why someone has the propensity to churn. Every product / service has some unique and key elements that determine customer affinity and inversely determine customer churn. The first key idea here is to isolate these key reasons. This can’t be done using text analytics, simply because AI/NLP tools are still not good enough to determine “Weight” around different reasons from simple text.

We can spend a lot of energy, time and effort around claiming that AI/NLP tools will one day get there, but it is 2020 and I’ve yet to meet a customer that can confidently tell me that their trust in their NLP model is high enough to make this determination.

We are showcasing a much simpler approach to this. We let the customer choose between a set of “reasons” when they go through an NPS survey. Here is an example below;

We allow users to choose between 1, 2 or 3 items – this is needed for isolation. There may be many reasons for churning, but we are forcing respondents to choose the “top” reasons for churn. This process allows for the isolation of the reasons automatically.

Identify

The next part is concrete identification. The typical argument against using a model like this, where the reasons are pre-determined, is that these reasons may not be exhaustive. This is correct. To solve for this, we include an “Other” option. It allows for the respondent to enter their own reasons for giving the NPS Score.

There are 2 key ideas that apply here.

- Focused Context – We don’t want respondents to ramble on about many different things. We need to identify the primary reason and since the primary reason is not in the options list, they can enter the reason.

- Limited Text – We also know that limiting the text to 1-2 lines (like Twitter’s 140 Characters) focuses the customer’s attention and zeros in on the root cause. It also allows for topic identification as opposed to sentiment analysis. We really don’t need any more sentiment analysis, we already know the NPS by now. The key here is accurate topic identification.

Furthermore, we use a data/content dictionary to determine “topics” using an NLP/text model. Again, this would be much more accurate, because we are only looking for “Topics” that the user has to type in, i.e, nouns, “Server was rude” or “Not enough choices” where we are modeling this for automatic identification of the topics.

AI/ML for Topic Discovery

QuestionPro has partnered with Bryght.ai and will be using that AI/ML model for topic identification.

One of the reasons for this is that we’ve worked with Bryght’s team to develop data-dictionaries across multiple and unique verticals, including Gaming, Retail, Gig-Economy, B2B SaaS, etc. These customized data-dictionaries based on verticalized industry knowledge give us better and more focused accuracy.

Predictive Suggestions

We also do predictive suggestions, very similar to Google Suggest. As users are typing in, we show them what others have suggested. This allows users to simply choose the item, instead of exponentially expanding the data-dictionary.

Here is an example of how we would do that visually.

Predict

So far, with the Identification & Isolation models above, we can deterministically say that the reasons a, b, and c are the top reasons why folks are dissatisfied/detractors and hence the assumption is that they all have a 100% propensity to churn. We believe it is logical to use detractors to identify and isolate reasons for churn. We assume that detractors are already churned and at this point, it would be nearly impossible to prevent that.

The Passive folks are the “Undecided” – they are wavering – but could lean either way. In our prediction model, we further ask the passive folks to choose the exact same set of reasons that the detractors were given. This allows us to compare the Passive to the Detractors and accurately identify and predict the passive folks that will churn.

Let’s take a real example and go through this. Let’s assume that there are 5 reasons Detractors have chosen.

- Poor Service [80%]

- Dirty Tables [30%]

- Insufficient Menu Choices [18%]

- Pricey & Expensive [12%]

- Location [21%]

These percentages won’t add up to 100 because we’ve allowed users to choose upto 3 reasons for giving a Detractor Score. As described above – this isolation model can be anywhere from 1 -3 (Choose the top reason or the top 2 or reasons.)

For the Passives; let’s say our distribution falls as below;

- Poor Service [22%]

- Dirty Tables [10%]

- Insufficient Menu Choices [10%]

- Pricey & Expensive [20%]

- Location [20%]

Anecdotally looking at the data, we can say that Poor Service, Dirty Tables and Location are the top reasons someone will be a detractor. This also means that anyone Passive, who chooses those three items is likely to be a detractor in the next visit or in the upcoming months. There are other factors weighing against that decision, but in the absence of other counterbalancing factors, all passives who select those options are candidates for churn.

We use this model to predict Churn – the % of Passive folks who select the same “reasons” as marked as “Churn Predictors”. It gives you a predictive model on who probably will churn.

Learn more: Passive Churn NPS+ | Spreadsheet

Uber: Live Examples of Churn Identification

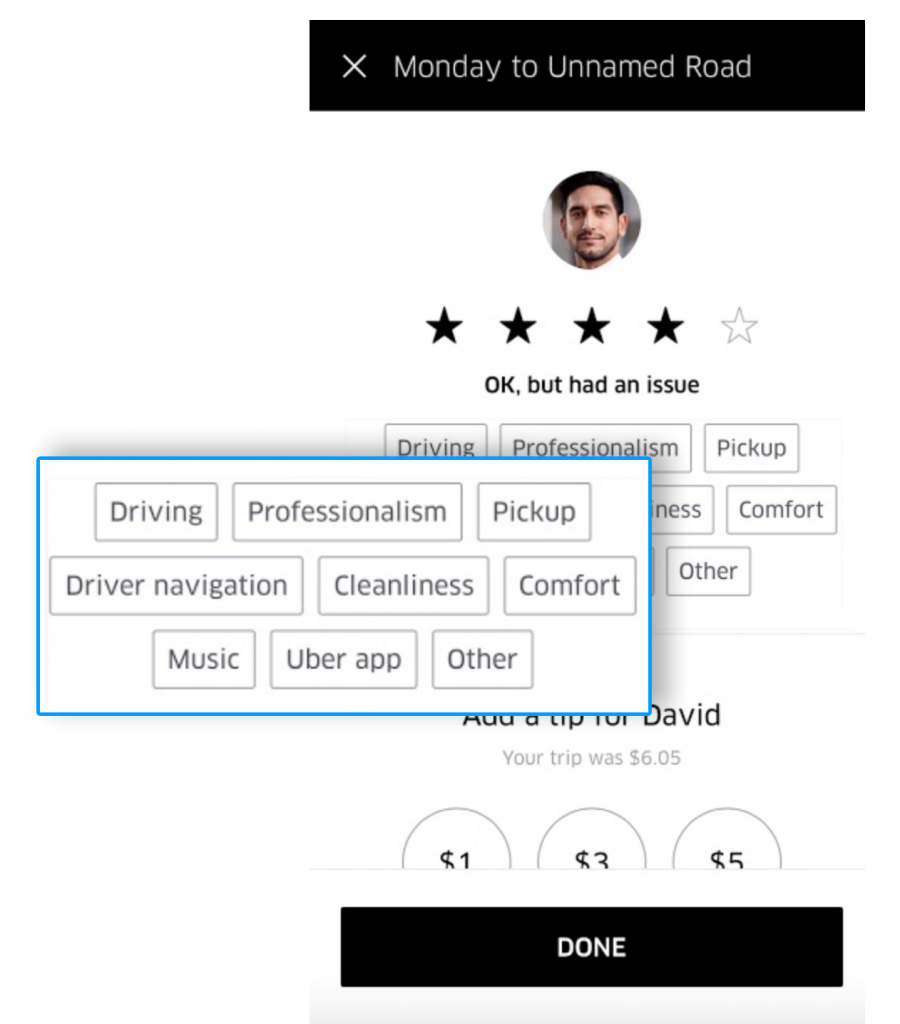

Uber uses a simplistic identification model for both promoter and detractor root-cause identification;

As you can see from the screenshot above, the user gave a 4 Star Rating – Assuming 3 & 4 Stars are passive, Uber is now trying to determine the root cause of the 4-Star rating – using a simple choice model.

Stated Vs. Actual Intent – Saturated Markets

One of the fundamental premises this model relies on is that detractors are indeed a very high churn risk. That being said, it has been anecdotally noted that in saturated markets like Insurance, Telecom et al, where the lethargy around canceling service is high, the churn metrics do not always closely match the actual churn. This is partially because of the inability of customers to switch solutions easily, insurance is a good example. In such scenarios, the churn prediction model may not yield results correctly, since we are fundamentally relying on the close proximity of stated intent to match actual intent.

However, we believe that it’s just a matter of time when the stated intent catches up to actual behavior change in terms of churn. Companies can take solace in the fact that, in saturated markets, churn is difficult but nonetheless given the pace of change, it’s coming.

Furthermore, when churn does kick in, in the saturated markets, the effect of that is generally extremely strong and no amount of tactical measures at that point can stop the hemorrhage.

Conclusion

While the efficacy of NPS has been debated for over 15+ years now, the underlying model for segregation of customers between “Advocates” – “Lurkers” and “Haters” is a fundamental construct in satisfaction and loyalty modeling. The Churn modeling that we propose is fundamentally based on the premise that “Lurkers” can be swayed, either to be “Advocates” or not.

The predictive model fundamentally relies on the principle that indifferent customers are the highest propensity to churn, in fact, even more so than passionate haters. We rely on this fundamental psychological precept to model our predictive churn process.

It flows into a central unified warehouse with mitigated tribal knowledge and uniform business taxonomy, making the research hub a one-stop-shop for everything insights. There is a greater hold on data management and accessibility, ensuring that you do not have to look in multiple places and reach out to various stakeholders to make sense of data. In this case, it also becomes simpler to manage multivariate research data.

Real-time Analytics

By using the insights repository, there is instant and real-time access to data and analytics. Not just that, with the help of intelligent labels and artificial intelligence (AI), it is possible to surface information about projects that are relevant and interesting. This feature makes using the insights repository even more lucrative for researchers and business stakeholders to leverage real-time analytics in amplified and reusable market research.